High Availability Autoscaling Openshift

To set up a multi-node HA Mosquitto broker and Management Center with autoscaling using Helm charts, you first need a running OpenShift OKD cluster. OpenShift offers many features on top of Kubernetes. To deploy a full-fledged OKD cluster, follow the official OpenShift OKD installation documentation. OKD can be installed in two main ways:

- IPI: Installer Provisioned Infrastructure

- UPI: User Provisioned Infrastructure

Installer Provisioned Infrastructure: Installer Provisioned Infrastructure (IPI) in OKD/OpenShift refers to a deployment model where the installation program provisions and manages all the components of the infrastructure needed to run the OpenShift cluster. This includes the creation of virtual machines, networking rules, load balancers, and storage components, among others. The installer uses cloud-specific APIs to automatically set up the infrastructure, making the process faster, more standardized, and less prone to human error compared to manually setting up the environment.

User Provisioned Infrastructure: User Provisioned Infrastructure (UPI) in OKD/OpenShift is a deployment model where users manually create and manage all the infrastructure components required to run the OpenShift cluster. This includes setting up virtual machines or physical servers, configuring networking, load balancers, storage, and any other necessary infrastructure components. Unlike the Installer Provisioned Infrastructure (IPI) model, where the installation program automatically creates and configures the infrastructure, UPI offers users complete control over the deployment process.

You are free to choose either method, as well as the cloud provider you want to deploy on. OpenShift OKD supports a number of cloud providers and also offers a bare-metal installation. For this deployment we chose UPI and deployed our infrastructure on Google Cloud Platform (GCP) using the private cluster method. This solution was therefore developed and tested on GCP; however, the basic infrastructure is unlikely to differ much across cloud providers.

A private cluster in GCP ensures that the nodes are isolated in a private network, reducing exposure to the public internet. Again, you are free to choose any infrastructure supported by OpenShift OKD. We briefly describe what the infrastructure looks like in our case so that you have a reference for your own.

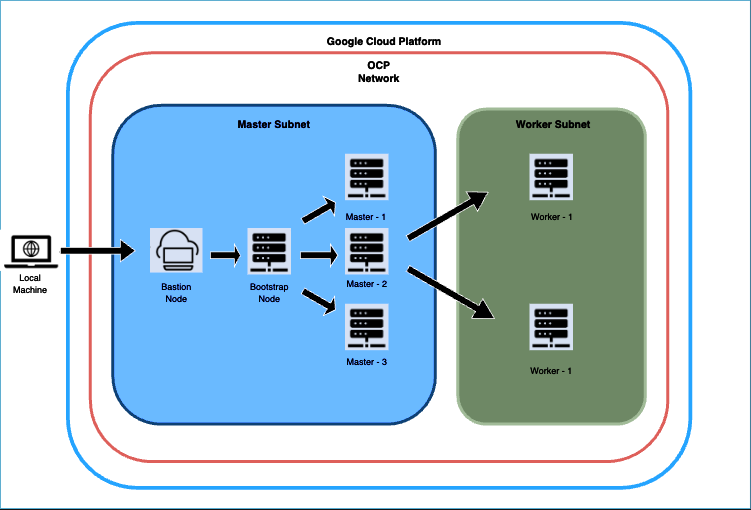

Figure 1: OKD Infrastructure on GCP during provisioning

The diagram depicts the deployment process for our OCP cluster on GCP, starting with the establishment of a bastion host. The bastion host is where we execute the commands to configure the bootstrap node, then the master nodes, and finally the worker nodes in a separate subnet. Before initiating the bootstrap procedure, we set up the essential infrastructure components, including networks, subnetworks, an IAM service account, an IAM project, a private DNS zone, load balancers, Cloud NATs, and a Cloud Router.

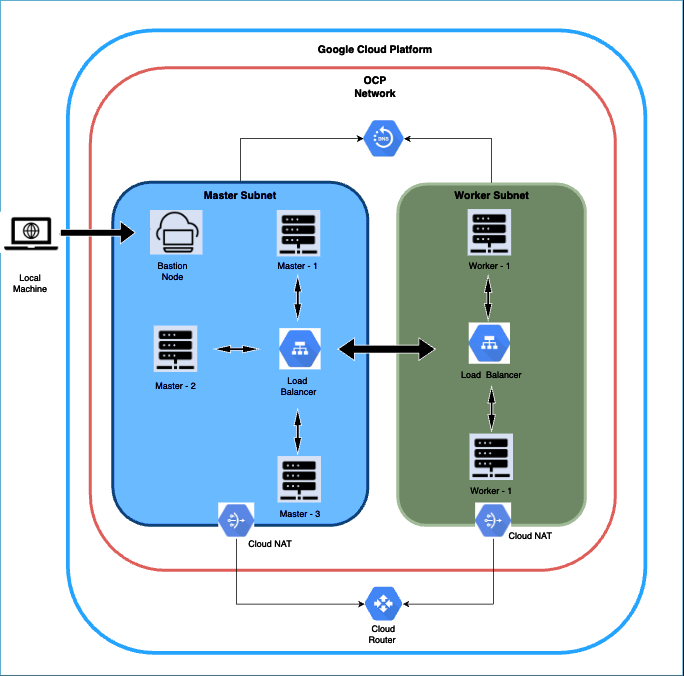

Upon completing the bootstrap phase, we removed the bootstrap components from the cluster. Subsequently, we focused on creating the worker nodes. Once the worker nodes were operational, we set up a reverse proxy on the bastion host to allow local access to the OCP Console UI through our browser. Finally, we confirmed that all cluster operators were marked as 'Available'. Once the provisioning is done, the architecture looks like Figure 2. All of these steps are part of the official documentation, where they are described in more detail.

Figure 2: OKD Infrastructure on GCP post provisioning


Note: This deployment involves setting up a private cluster, which means the cluster can only be accessed through the bastion host. Consequently, we avoid using public DNS for this installation and rely solely on a private DNS zone. To provide access to the external UI, we use a reverse proxy.

We will also provision an NFS server in the same subnet as the worker nodes. This setup deploys 3 Mosquitto brokers as a StatefulSet, and a Management Center pod and an HAProxy pod as Deployments. The StatefulSet and Deployment pods mount volumes from the NFS server, so you need to set up the NFS server before using this deployment.

Why Auto-scaling?

When we deploy on Kubernetes, we start by default with 3 Mosquitto pods, 1 MMC pod and 1 HAProxy pod. However, we might run into problems if a large number of incoming requests and connections overloads the Mosquitto brokers, especially in DynSec mode. We want the setup to adjust to the load in order to avoid crashes and meet system requirements, while avoiding the need for human monitoring and intervention.

How does Auto-scaling work?

Along with the above setup we also deploy helper pods that take care of auto-scaling:

- Metrics Server: This pod monitors the metrics of the deployed application pods, such as CPU and memory.

- Horizontal Pod Autoscaler (HPA): The HPA automatically scales the pods up or down based on a threshold. For example, if the CPU threshold is set to 60% and the overall CPU consumption across all pods reaches 60%, the HPA scales up the Mosquitto pods.

- Cluster-operator: This pod keeps track of pod scaling and sends requests to the MQTT APIs so that newly scaled pods are added to the internal cluster of Mosquitto brokers. For example, if the number of Mosquitto brokers scales from 3 to 5, the cluster-operator sends addNode and joinCluster MQTT requests for the 2 added nodes. If pods are scaled down, the cluster-operator sends removeNode and leaveCluster MQTT API requests.
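To make the scaling rule more concrete, the sketch below applies the replica formula from the Kubernetes HPA documentation, desired = ceil(current x currentUtilization / targetUtilization), capped at a maximum. The function name desired_replicas and its arguments are illustrative and not part of the Helm chart; the real HPA also applies a tolerance and stabilization windows, which are omitted here.

```shell
#!/bin/sh
# Illustrative only: approximate how many replicas the HPA would request.
# Usage: desired_replicas <current-replicas> <current-cpu-%> <target-cpu-%> <max-replicas>
desired_replicas() (
  current=$1 utilization=$2 target=$3 max=$4
  # Integer ceiling of current * utilization / target.
  desired=$(( (current * utilization + target - 1) / target ))
  # Keep the result between one replica and the configured maximum.
  if [ "$desired" -lt 1 ]; then desired=1; fi
  if [ "$desired" -gt "$max" ]; then desired=$max; fi
  echo "$desired"
)

# Example: 3 brokers at 90% average CPU, a 60% target and a maximum of 5 pods.
desired_replicas 3 90 60 5
```

With these example numbers the raw result is 4.5, which is rounded up to 5 pods. The cluster-operator would then have to add the two new brokers to the Mosquitto cluster.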

Recommended Setup

- 3 control-plane nodes, 2 worker nodes and an NFS server.

- The Management Center (MMC) is configured with a node affinity, which means the MMC pod is scheduled on a specific worker node. The default configuration expects the worker nodes to be named openshift-test-rcjp5-worker-0 and openshift-test-rcjp5-worker-1. If your nodes are named this way, MMC is spawned on openshift-test-rcjp5-worker-0.

- If your nodes have different names, adjust the node hostnames in the Helm chart so that the MMC node affinity remains intact. To do so, uncompress the Helm chart and change the hostname entries in values.yaml:

tar -xzvf mosquitto-multi-node-multi-host-autoscale-0.1.0.tgz

cd mosquitto-multi-node-multi-host-autoscale

- Change the hostname values from openshift-test-rcjp5-worker-0/openshift-test-rcjp5-worker-1 to the names of your machines. For example, openshift-test-rcjp5-worker-0 and openshift-test-rcjp5-worker-1 can be replaced with worker-node-0 and worker-node-1. MMC would then be spawned on the node named worker-node-0.

- Go back to the parent directory:

cd ../

- Package the Helm chart back into its original form:

helm package mosquitto-multi-node-multi-host-autoscale
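The hostname change can also be scripted. The following is a minimal sketch, assuming the node names appear literally in values.yaml as described above. The helper name rename_worker and the new node names are only examples.

```shell
#!/bin/sh
# Replace every literal occurrence of an old node hostname in a file.
# Usage: rename_worker <file> <old-hostname> <new-hostname>
rename_worker() (
  file=$1 old=$2 new=$3
  tmp=$(mktemp)
  # Hostnames contain only letters, digits, dots and hyphens,
  # so "|" is a safe sed delimiter.
  sed "s|${old}|${new}|g" "$file" > "$tmp"
  # Write back in place so the file keeps its original permissions.
  cat "$tmp" > "$file"
  rm -f "$tmp"
)

# Example (run inside the uncompressed chart directory):
# rename_worker values.yaml openshift-test-rcjp5-worker-0 worker-node-0
# rename_worker values.yaml openshift-test-rcjp5-worker-1 worker-node-1
```

After running it, review values.yaml before packaging the chart again.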

HA-PROXY Configurations

HAProxy needs to be configured to match the Kubernetes setup. For example, the servers m1, m2 and m3 need to be configured in this case. Configure additional servers based on your requirements and on the number of mounts you have created on the NFS server. Because the autoscaling setup may scale your deployment up and down, make sure you set up at least 6 server entries in your haproxy.cfg. Instead of Docker IPs, we use DNS names to address the pods, for example mosquitto-0.mosquitto.multinode.svc.cluster.local. Here mosquitto-0, mosquitto-1 and mosquitto-2 are the names of the individual Mosquitto pods running as a StatefulSet. Each new pod increases the pod ordinal by 1. The connection endpoints follow this template:

<pod-name>.<name-of-the-statefulset>.<namespace>.svc.cluster.local

Your setup folder comes with a default HAProxy configuration, shown below, in which we have configured 6 servers. It assumes that you are using the namespace multinode. You can change the namespace name; the procedure is discussed at a later stage.

global

daemon

maxconn 10000

resolvers kubernetes

nameserver dns1 172.30.0.10:53 # Replace with your Kube DNS IP

resolve_retries 3

timeout retry 1s

hold valid 10s

frontend mqtt_frontend

bind *:1883

mode tcp

default_backend mqtt_backend

timeout client 10m

backend mqtt_backend

timeout connect 5000

timeout server 10m

mode tcp

option redispatch

server m1 mosquitto-0.mosquitto.multinode.svc.cluster.local:1883 check resolvers kubernetes on-marked-down shutdown-sessions

server m2 mosquitto-1.mosquitto.multinode.svc.cluster.local:1883 check resolvers kubernetes on-marked-down shutdown-sessions

server m3 mosquitto-2.mosquitto.multinode.svc.cluster.local:1883 check resolvers kubernetes on-marked-down shutdown-sessions

server m4 mosquitto-3.mosquitto.multinode.svc.cluster.local:1883 check resolvers kubernetes on-marked-down shutdown-sessions

server m5 mosquitto-4.mosquitto.multinode.svc.cluster.local:1883 check resolvers kubernetes on-marked-down shutdown-sessions

server m6 mosquitto-5.mosquitto.multinode.svc.cluster.local:1883 check resolvers kubernetes on-marked-down shutdown-sessions

172.30.0.10 is the OpenShift DNS server IP. We add the nameserver so that HAProxy does not crash when some of the servers are unavailable, since with autoscaling the Mosquitto pods may scale up and down.
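If you raise the maximum number of pods or use a different namespace, the server entries can be generated from the endpoint template instead of being typed by hand. This is only a sketch: the function name haproxy_servers is hypothetical, and it reproduces the server options used in the default configuration above.

```shell
#!/bin/sh
# Print one HAProxy "server" line per potential Mosquitto pod.
# Usage: haproxy_servers <count> [namespace]
haproxy_servers() (
  count=$1 namespace=${2:-multinode}
  i=0
  while [ "$i" -lt "$count" ]; do
    # Server names start at m1, pod ordinals start at 0.
    printf 'server m%d mosquitto-%d.mosquitto.%s.svc.cluster.local:1883 check resolvers kubernetes on-marked-down shutdown-sessions\n' \
      "$(( i + 1 ))" "$i" "$namespace"
    i=$(( i + 1 ))
  done
)

# Example: the six entries of the default configuration.
haproxy_servers 6 multinode
```

The generated lines belong in the backend mqtt_backend section of haproxy.cfg.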

Openshift Cluster Setup and Configuration

Dependencies and Prerequisites

Because we chose a private cluster, the master and worker nodes do not have access to the internet. Therefore, we install the dependencies on the bastion node and also deploy the application from the bastion node.

Prerequisites

- Running Openshift OKD cluster

- Helm

- Bastion node with internet access (running Ubuntu in our case).

Once your OKD cluster is up and running (see the official OpenShift documentation), we can move on to the actual configuration and deployment of our Mosquitto application.

Setup the ha-cluster setups folder:

- Copy or set up the mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift folder on the Bastion node. Also make sure to create a directory named license inside the copied folder that contains the license.lic file we provided you. The relative path would then be mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/license/license.lic.

Create a namespace

- Create the namespace in which you want to deploy the application. The deployment folder is pre-configured for the namespace named multinode. To use the default configuration, create a namespace named multinode using the following command:

oc create namespace multinode

- If you want to use a different namespace, use the following command, replacing <your-custom-namespace> with the name of the namespace you want to configure:

oc create namespace <your-custom-namespace>

Create configmap for your license

- Create a configmap for your license key (the same license file you placed in the license directory above). You can create the configmap using the following command:

oc create configmap mosquitto-license -n <namespace> --from-file=<path-to-your-license-file>

- Make sure the name of the configmap remains mosquitto-license, as this is required by the deployment files and statefulsets.

- For example, if the chosen namespace is multinode and the license file is at /root/mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/license/license.lic, the command would be:

oc create configmap mosquitto-license -n multinode --from-file=/root/mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/license/license.lic

Setup NFS Server

- Copy or set up the mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift folder on the NFS server.

- Install the necessary dependencies:

sudo apt-get update

sudo apt-get install nfs-kernel-server

- Configure the exports directory. Open the /etc/exports file on the NFS server and expose the directories so that pods running on the worker nodes can access them and mount the volumes.

The cluster starts with 3 Mosquitto broker nodes by default; however, we configure and expose a total of 6 Mosquitto data directories along with an MMC config directory on the NFS server. Because the provisioning of data directories on the NFS server is not dynamic at the moment, configuring three extra Mosquitto data directories allows autoscaling to scale up to 6 pods seamlessly.

The Helm chart is therefore also configured to create a total of 7 persistent volumes and persistent volume claims (6 for the Mosquitto data directories and 1 for MMC). However, only three Mosquitto brokers are spun up by default.

You can use the following as a reference. Here we expose six Mosquitto nodes and the Management Center, and the mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift folder resides at /root on our NFS server.

/root/mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/server1/mosquitto/data *(rw,sync,no_root_squash,no_subtree_check)

/root/mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/server2/mosquitto/data *(rw,sync,no_root_squash,no_subtree_check)

/root/mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/server3/mosquitto/data *(rw,sync,no_root_squash,no_subtree_check)

/root/mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/server4/mosquitto/data *(rw,sync,no_root_squash,no_subtree_check)

/root/mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/server5/mosquitto/data *(rw,sync,no_root_squash,no_subtree_check)

/root/mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/server6/mosquitto/data *(rw,sync,no_root_squash,no_subtree_check)

/root/mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/server1/management-center/config *(rw,sync,no_root_squash,no_subtree_check)

- On your bastion node, check the user id allocated to your namespace after you have created the namespace (see Create a namespace). You can do so by running the command oc describe namespace <namespace>, where <namespace> is the namespace you chose. For the default namespace, i.e. multinode, the command would be oc describe namespace multinode.

The command prints a description of the namespace. A sample output could look like this:

Name: multinode

Labels: kubernetes.io/metadata.name=multinode

pod-security.kubernetes.io/audit=restricted

pod-security.kubernetes.io/audit-version=v1.24

pod-security.kubernetes.io/warn=restricted

pod-security.kubernetes.io/warn-version=v1.24

Annotations: openshift.io/sa.scc.mcs: s0:c27,c4

openshift.io/sa.scc.supplemental-groups: 1000710000/10000

openshift.io/sa.scc.uid-range: 1000710000/10000

Status: Active

No resource quota.

No LimitRange resource.

Note down the value of openshift.io/sa.scc.uid-range. In this case it is 1000710000. You will need it to set permissions on the exposed directories on the NFS server and also when installing the Helm chart.

- Make sure all the data directories have adequate privileges so that the Mosquitto Kubernetes pods can create additional directories inside them. To do so, give ownership of all the relevant data directories of the Mosquitto servers and the config directory of MMC to the user id noted in the previous step, using the following commands:

sudo chown -R 1000710000:1000710000 /root/mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/server1/mosquitto/data

sudo chown -R 1000710000:1000710000 /root/mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/server2/mosquitto/data

sudo chown -R 1000710000:1000710000 /root/mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/server3/mosquitto/data

sudo chown -R 1000710000:1000710000 /root/mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/server4/mosquitto/data

sudo chown -R 1000710000:1000710000 /root/mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/server5/mosquitto/data

sudo chown -R 1000710000:1000710000 /root/mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/server6/mosquitto/data

sudo chown -R 1000710000:1000710000 /root/mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/server1/management-center/config

Note: We set the ownership to 1000710000 based on the uid range of our namespace. Use the uid range of your own namespace, as noted in the previous step.

- Expose the directories using:

sudo exportfs -a

- Restart the kernel server:

sudo systemctl restart nfs-kernel-server
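The repetitive parts of the NFS preparation can be sketched as two small helpers. This is a hedged example, not part of the delivered setup folder: the function names namespace_uid and nfs_exports are hypothetical, and the directory layout (server1 to serverN plus the MMC config directory under server1) is taken from the reference exports above.

```shell
#!/bin/sh
# Extract the first UID of the namespace range from
# `oc describe namespace` output (read from stdin).
namespace_uid() (
  sed -n 's|.*openshift\.io/sa\.scc\.uid-range: *\([0-9][0-9]*\)/.*|\1|p'
)

# Print the /etc/exports entries for <count> Mosquitto data directories
# plus the MMC config directory.
# Usage: nfs_exports <folder-path> <count>
nfs_exports() (
  base=$1 count=$2 opts='*(rw,sync,no_root_squash,no_subtree_check)'
  i=1
  while [ "$i" -le "$count" ]; do
    printf '%s/server%d/mosquitto/data %s\n' "$base" "$i" "$opts"
    i=$(( i + 1 ))
  done
  printf '%s/server1/management-center/config %s\n' "$base" "$opts"
)

# Example usage (commented out: needs cluster access and root privileges).
# On the bastion node:
#   uid=$(oc describe namespace multinode | namespace_uid)
# On the NFS server, with $uid set to the value found above:
#   folder=/root/mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift
#   nfs_exports "$folder" 6 | sudo tee -a /etc/exports
#   nfs_exports "$folder" 6 | cut -d' ' -f1 | xargs sudo chown -R "$uid:$uid"
```

Generating the entries from a count keeps the exports, the ownership changes and the maximum replica count in step if you later allow more than 6 pods.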

Installation

Prerequisites:

- Openshift OKD Cluster should be up and running.

- You have successfully created the namespace and the configmap for your license (i.e. mosquitto-license).

- You have configured your NFS server by exposing the directories.

We can now deploy our Mosquitto application on OpenShift using two different strategies: Helm charts or the OpenShift UI.

Installation using Helm Charts:

Helm charts offer a comprehensive solution for configuring various Kubernetes resources—including stateful sets, deployment templates, services, and service accounts—through a single command, streamlining the deployment process.

Setup the folder on your Bastion Node:

- Make sure you have the mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift folder on the Bastion node.

Change Directory:

- Navigate to the project directory (i.e. multi-node-multi-host-autoscale):

cd mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/kubernetes/multi-node-multi-host-autoscale/

Install Helm Chart:

Use the following helm install command to deploy the Mosquitto application onto your OKD cluster. Replace <release-name> with the desired name for your Helm release and <namespace> with your chosen Kubernetes namespace:

helm install <release-name> mosquitto-multi-node-multi-host-autoscale-0.1.0.tgz --set repoPath=<root-path-to-openshift-folder> --set runAsUser=<namespace-alloted-user-id> --set nfs=<your-nfs-ip> -n <namespace> --set imageCredentials.registry=registry.cedalo.com --set imageCredentials.username=<username> --set imageCredentials.password=<password> --set imageCredentials.email=<email>

- repoPath: Set the repoPath flag to the path where the folder mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift resides on the NFS server. In our case the folder is at /root/mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift, therefore repoPath would be /root.

- namespace: Set it to the namespace of your deployment. Note: If you want to deploy the setup in a namespace other than multinode, make sure to pass the additional flag --set namespace=<your-custom-namespace> along with the helm installation command. This custom namespace must have been created in the Create a namespace step of Openshift Cluster Setup and Configuration.

- namespace-alloted-user-id: Set it to the user id you noted while setting up the NFS server.

- imageCredentials.username: Your Docker username provided by the Cedalo team.

- imageCredentials.password: Your Docker password provided by the Cedalo team.

- imageCredentials.email: Registered e-mail for accessing the Docker registry.

Note: You need to configure the IP of your NFS server by passing --set nfs=<your-nfs-ip> along with the helm installation command. Make sure you use the internal NFS IP accessible from within the OKD cluster and not the external IP exposed to the internet (in case you have one).

Note: By default the HPA threshold is set to 60. That means the Horizontal Pod Autoscaler scales the pods if the overall CPU consumption passes the 60% threshold. To set a new threshold, pass --set hpa_threshold=<new_hpa_threshold> along with the helm installation command.

Note: By default the maximum number of pods is set to 5. That means the HPA can scale the replicas up to at most 5 pods. To set a higher number, pass --set max_replica=<your-max-replica-count> along with the helm installation command. Make sure you have configured the servers in the HAProxy configuration and also exported the data directories on the NFS server for the new potential pods/servers.

For example, if your NFS IP is 10.10.10.10, the user id for your namespace is 1000710000, the mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift folder resides at /root on your NFS server, your namespace is test-namespace, your username, password and email are demo-username, demo-password and demo@gmail.com, your new HPA threshold is 80, the max replica count is changed to 6 and your arbitrary release name is sample-release-name, then your helm installation command would be:

helm install sample-release-name mosquitto-multi-node-multi-host-autoscale-0.1.0.tgz --set repoPath=/root -n test-namespace --set namespace=test-namespace --set nfs=10.10.10.10 --set runAsUser=1000710000 --set hpa_threshold=80 --set max_replica=6 --set imageCredentials.registry=registry.cedalo.com --set imageCredentials.username=demo-username --set imageCredentials.password=demo-password --set imageCredentials.email=demo@gmail.com

You can monitor the running pods using the following command:

oc get pods -o wide -n <namespace>

To uninstall the setup:

helm uninstall <release-name> -n <namespace>
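When monitoring an installation, it can help to reduce the pod listing to a single number. The sketch below counts the pods reported as Running. It assumes the default table output of oc get pods, in which STATUS is the third column; the helper name running_pods is hypothetical.

```shell
#!/bin/sh
# Count pods whose STATUS column is "Running" in `oc get pods` output
# (read from stdin).
running_pods() (
  # Skip the header row; STATUS is the third column.
  awk 'NR > 1 && $3 == "Running" { n++ } END { print n + 0 }'
)

# Example usage:
# oc get pods -n multinode | running_pods
```

The exact number of pods to expect depends on which helper pods the chart deploys in your environment, so compare the count against the full listing at least once.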

Your Mosquitto setup is now running with three standalone Mosquitto nodes and the Management Center. To finish the setup, use the Management Center UI to create the Mosquitto HA cluster. The Management Center is reachable from localhost via port 31021.

- To set up the cluster, follow the steps in Create Cluster in Management Center.

- To access this UI from outside your localhost, see Allow cluster access from outside the Bastion node.

Further Useful Commands:

- If you want to change mosquitto.conf, you can do so by uncompressing the Helm chart, making the required changes and packaging the Helm chart again. The detailed procedure is as follows:

tar -xzvf mosquitto-multi-node-multi-host-autoscale-0.1.0.tgz

cd mosquitto-multi-node-multi-host-autoscale/files/

- Make changes to mosquitto.conf and save it.

- Go back to the directory that contains the chart folder:

cd ../../

- Package the Helm chart back into its original form:

helm package mosquitto-multi-node-multi-host-autoscale

- Uninstall the Helm release:

helm uninstall <release-name> -n <namespace>

- Reinstall the Helm chart using the same command you used the first time, from the mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/kubernetes/multi-node-multi-host-autoscale/ directory.

Installation using Openshift UI

Prerequisites:

- Openshift OKD Cluster should be up and running.

- You have configured your NFS Server by exposing the directories.

Installation

Navigate to the project directory:

cd mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift/kubernetes/multi-node-multi-host-autoscale/mosquitto-ha-autoscale-openshift-ui/

Log in to your OpenShift UI console.

Create Namespace:

- Navigate to the Administration tab in the sidebar.

- Click Namespaces.

- Click Create Namespace.

- Enter the name of the namespace you want to use. By default, the templates are configured with the namespace named multinode. If you want to use the default namespace, enter multinode.

- Set Default network policy to No restrictions.

- Click Create.

Deploy Configmaps (License):

- Navigate to the Workloads tab in the sidebar.

- Click ConfigMaps.

- Click Create ConfigMap.

- Select Form view.

- Name the configmap mosquitto-license and mark it as immutable.

- Add your license file in the Key-value pair section.

- Click Create.

Deploy Secrets:

- Navigate to the Workloads tab in the sidebar.

- Click Secrets.

- Click Create Secret and select Image pull secret.

- Enter mosquitto-pro-secret as the Secret name.

- Choose Image registry credentials as the Authentication type.

- Enter registry.cedalo.com as the Registry server address.

- Enter the username, password and email given to you by the Cedalo team.

- Click Create.

Deploy Service Accounts:

- Navigate to the User Management tab in the sidebar.

- Click Service Accounts.

- Click Create ServiceAccount.

- Copy the content from the service_account.yaml file in the mosquitto-ha-autoscale-openshift-ui folder, starting with the service account named ha.

- Paste the content and make sure to change the namespace from multinode to your chosen namespace (you can leave it as it is if you use the default one).

- Click Create.

- Repeat the steps for the remaining service accounts, namely mosquitto and mmc.

- At the end of this activity, you will have three service accounts: ha, mosquitto and mmc.

- Deploy Configmaps:

- Navigate to the Workloads tab in the sidebar.

- Click ConfigMaps.

- Click Create ConfigMap.

- Copy the content from the config-json.yaml file in the mosquitto-ha-autoscale-openshift-ui folder.

- Paste the content and make sure to change the namespace from multinode to your chosen namespace (you can leave it as it is if you use the default one).

- Click Create.

- Repeat the steps for haconfig.yaml and mosquitto-config1.yaml to create two more ConfigMaps.

- Deploy Services:

- Navigate to the Networking tab in the sidebar.

- Click Services.

- Click Create Service.

- Copy the content from service-ha-autoscale-openshift.yaml.

- Paste it in the YAML editor and make sure to change the namespace from multinode to your chosen namespace (you can leave it as it is if you use the default one).

- Click Create.

- Repeat the steps for service-ha-autoscale.yaml and service_statefulset.yaml to create two more services.

- At the end of this activity, you will have three services: ha, mosquitto and mmc.

- Deploy Persistent Volume (PV):

- Navigate to the Storage tab in the sidebar.

- Click PersistentVolumes.

- Click Create PersistentVolumes.

- Copy the contents from pv-autoscale.yaml, starting with the PV named mosquitto-pv-0.

- Paste it in the YAML editor and make sure to change the namespace from multinode to your chosen namespace (you can leave it as it is if you use the default one).

- Make sure that the path to the mosquitto-2.9-mmc-2.9-cluster-kubernetes-autoscaling-openshift folder on your NFS server is correct. The default configuration expects the folder to be at /root.

- Change the NFS server IP to your own NFS IP in each Persistent Volume entry.

- Click Create.

- Repeat the steps to create the remaining PVs: mosquitto-pv-1, mosquitto-pv-2, mosquitto-pv-3, mosquitto-pv-4, mosquitto-pv-5 and mmc-pv.

- Deploy Persistent Volume Claims (PVCs):

- Navigate to the Storage tab in the sidebar.

- Click PersistentVolumeClaim.

- Click Create PersistentVolumeClaim.

- Copy the contents from pvc-autoscale.yaml, starting with the PVC named mosquitto-data-mosquitto-0.

- Paste it in the YAML editor and make sure to change the namespace from multinode to your chosen namespace (you can leave it as it is if you use the default one).

- Click Create.

- Repeat the steps to create the remaining PVCs: mosquitto-data-mosquitto-1, mosquitto-data-mosquitto-2, mosquitto-data-mosquitto-3, mosquitto-data-mosquitto-4, mosquitto-data-mosquitto-5 and mmc-config.

- Deploy Roles:

- Navigate to the User Management tab in the sidebar.

- Select Roles and then click Create Role.

- Copy the contents from clusterRoleDeployment.yaml.

- Paste it in the YAML editor and click Create.

- Repeat the step for the contents of clusterRoleBinding.yaml.

- Repeat the step for the contents of hpa-permissions.yaml. Create each configuration in the matching section, i.e. either Roles or RoleBindings.

- Configure Metric-Server

- Open components.yaml.

- You will notice that the file contains many different types of configuration, including Role, clusterRoles, clusterRoleBinding, Services, Deployments and APIService.

- Based on the configuration type, copy and paste each configuration individually into the respective section of the OpenShift console. That means Role, clusterRoles and clusterRoleBinding can be created in the Roles/RoleBindings section under User Management, APIService and Service can be created from Services under the Networking tab, and finally Deployment can be created from the Deployments section under the Workloads tab.

- Create each one of these configurations.

- Deploy Mosquitto (Statefulset):

- Navigate to the Workloads tab in the sidebar.

- Click StatefulSets.

- Click Create StatefulSet.

- Copy the contents from statefulset-mosquitto.yaml.

- Paste it in the YAML editor and make sure to change the namespace from multinode to your chosen namespace (you can leave it as it is if you use the default one).

- Set the field runAsUser to your own user id. The default is 1000710000. You can find the user id of your namespace with the command oc describe namespace multinode. Refer to the Setup NFS Server section for more details.

- Click Create. After this activity, you will see the statefulset pods scaling from 0 to 3.

- Deploy MMC (Deployment):

- Navigate to the Workloads tab in the sidebar.

- Click Deployments.

- Click Create Deployment.

- Copy the contents from deployment-mmc-autoscale.yaml.

- Paste it in the YAML editor and make sure to change the namespace from multinode to your chosen namespace (you can leave it as it is if you use the default one).

- As the MMC pod has a node affinity, set the value field of the kubernetes.io/hostname entry under nodeAffinity to the hostname of the worker node where you want the MMC pod to spin up. The default value is openshift-test-rcjp5-worker-0.

- Set the field runAsUser to your own user id. The default is 1000710000. You can find the user id of your namespace with the command oc describe namespace multinode.

- Click Create.

- Deploy HAProxy (Deployment):

- Navigate to the Workloads tab in the sidebar.

- Click Deployments.

- Click Create Deployment.

- Copy the contents from deployment-ha-autoscale.yaml.

- Paste it in the YAML editor and make sure to change the namespace from multinode to your chosen namespace (you can leave it as it is if you use the default one).

- Click Create.

- Deploy HPA (HorizontalPodAutoscaler):

- Navigate to the Workloads tab in the sidebar.

- Click HorizontalPodAutoscalers.

- Click Create HorizontalPodAutoscaler.

- Copy the contents from mosquitto-hpa.yaml.

- Paste it in the YAML editor and make sure to change the namespace from multinode to your chosen namespace (you can leave it as it is if you use the default one).

- Choose minReplicas and maxReplicas. Note that choosing maxReplicas above 6 requires additional configuration at the Helm chart level and the NFS server level.

- Set the averageUtilization field according to your needs. The default is 60.

- Click Create.

- Deploy Cluster Operator (Deployment):

- Navigate to the Workloads tab in the sidebar.

- Click Deployments.

- Click Create Deployment.

- Copy the contents from deployment-cluster-operator.yaml.

- Paste it in the YAML editor and make sure to change the namespace from multinode to your chosen namespace (you can leave it as it is if you use the default one).

- Click Create.

Create Cluster in Management Center

After you have completed the installation process, the last step is to configure the Mosquitto HA cluster.

Access the Management Center and log in with the default credentials: username cedalo and password mmcisawesome.

- Make sure all three Mosquitto nodes are connected in the connection menu. The HA proxy will only connect after the cluster is successfully set up.

- Navigate to Cluster Management and click NEW CLUSTER.

- Configure Name and Description, and choose between Full-sync and Dynamic Security Sync.

- Configure the IP addresses. Instead of private IP addresses we will use DNS addresses:

  - For node1: mosquitto-0.mosquitto.multinode.svc.cluster.local and select broker1 from the drop-down.

  - For node2: mosquitto-1.mosquitto.multinode.svc.cluster.local and select broker2 from the drop-down.

  - For node3: mosquitto-2.mosquitto.multinode.svc.cluster.local and select broker3 from the drop-down.

  - Replace "multinode" with your own namespace. If you have used the default one, use the addresses as given. mosquitto-0 has to be mapped to the mosquitto-1 node in the MMC UI, and so on.

- Click Save.

Connect to cluster

After the creation of the cluster, you can now select the cluster leader in the drop-down in the top right side of the MMC. This is needed, because only the leader is able to configure the cluster. The drop-down appears as soon as you are in one of the broker menus. Go to the "Client" menu and create a new client to connect from. Make sure to assign a role, like the default "client" role, to allow your client to publish and/or subscribe to topics.

Now you can connect to the Mosquitto cluster.

In this example command we use mosquitto_sub to subscribe to all topics:

mosquitto_sub -h <external-public-ip-of-bastion-node> -p 31028 -u <username> -P <password> -t '#'

Make sure to replace your IP, username and password.

Allow cluster access from outside the Bastion node

Most of the time it is not practical to have the Mosquitto cluster available only locally. To access it from the outside, you can add an additional HAProxy on the Bastion node.

Setup HA Proxy

To access MMC and the HAProxy connection over the internet, you can add an additional HAProxy service on the Bastion node that redirects traffic to the worker node running the MMC and HAProxy pods (the HAProxy pod in turn directs the traffic to the Mosquitto brokers). This is necessary because the worker nodes are not reachable from the internet. Below is an example of how to add an extra frontend and backend to forward traffic to MMC and HAProxy. Make sure to add any further HAProxy configuration you need, beyond the config shown below, on your own.

```
frontend localhost_31021
    bind *:31021
    option tcplog
    mode tcp
    default_backend servers_w0

backend servers_w0
    mode tcp
    server w0 10.1.20.2:31021

frontend localhost_31028
    bind *:31028
    option tcplog
    mode tcp
    default_backend servers_w1

backend servers_w1
    mode tcp
    server w1 10.1.20.2:31028 # Assuming 10.1.20.2 is the IP of the worker node; adjust to your setup
```

You can then access the MMC console using the external public IP of your bastion node on port 31021. Open the following URL in your browser:

http://<external-public-ip-of-bastion-node>:31021 (MMC)

You can also forward the ports from the Bastion node to your local machine to access them on localhost.

Port forwarding to your localhost

By using a secure ssh connection it is possible to forward certain ports to your localhost. Use the following command:

ssh -L 127.0.0.1:31021:127.0.0.1:31021 -L 127.0.0.1:31028:127.0.0.1:31028 <external-public-ip-of-bastion-node>

Port 31021 gives access to the Management Center, while port 31028 gives access to the cluster leader node.

It is now possible to access the Management Center via your browser:

http://localhost:31021

Usage

Once the installation is complete, you can start using the multi-node Mosquitto broker. Be sure to check the Mosquitto documentation for further details on configuring and using the broker.