Mosquitto High Availability Cluster

Introduction

The Cedalo high availability feature lets you run a cluster of Mosquitto nodes with a single leader and multiple followers, so that the broker remains available even if a single node becomes unavailable, whether through a fault or for an upgrade. This document describes the recommended cluster architecture and how to configure the cluster, both for first use and for later changes.

Cluster Modes

There are two different cluster modes available:

Both modes use at least three brokers to synchronize information in case of a broker failure.

Full Sync: The first mode acts as an active-passive cluster, meaning that only one of the three nodes is active and accepts client connections. The active node synchronizes MQTT session information and authentication information across the cluster. If the active broker goes down, a client can therefore reconnect to the new leader exactly as if it were the original leader. This mode allows clients to communicate with one another. It is labelled "Full-Sync" in the MMC UI.

Dynamic-Security Sync: The second mode acts as an active-active cluster, meaning that all three nodes can accept client connections at the same time. Only the dynamic security authentication is synchronized between nodes, so clients can connect to any node and access topics as if it were any of the other nodes, but they are not able to communicate with any other given client, because that client may be connected to a different node. No MQTT information is synchronized between brokers. This mode is useful when clients do not communicate with each other, but send messages to a back end service that is also connected to each broker.

| Criteria \ Setup | Single Node | HA - Full Sync (active-passive) | HA - Dynamic-Security Sync (active-active) |
|---|---|---|---|
| All Nodes Available | ✅ | ❌ Only leader | ✅ |
| MQTT Session Sync | Not needed | ✅ | ❌ |
| Authentication & Authorization Sync | Not needed | ✅ | ✅ |

Cluster architecture

Overview

The Mosquitto cluster comprises at least three nodes. Only a single node is available for use by MQTT clients at any one time; the other nodes operate as failover nodes. The cluster expects a minimum of two nodes to be available at once, to provide a leader and a fallback node. If the cluster degrades so that only a single node is available, clients will be unable to connect until the cluster is in a stable state again.

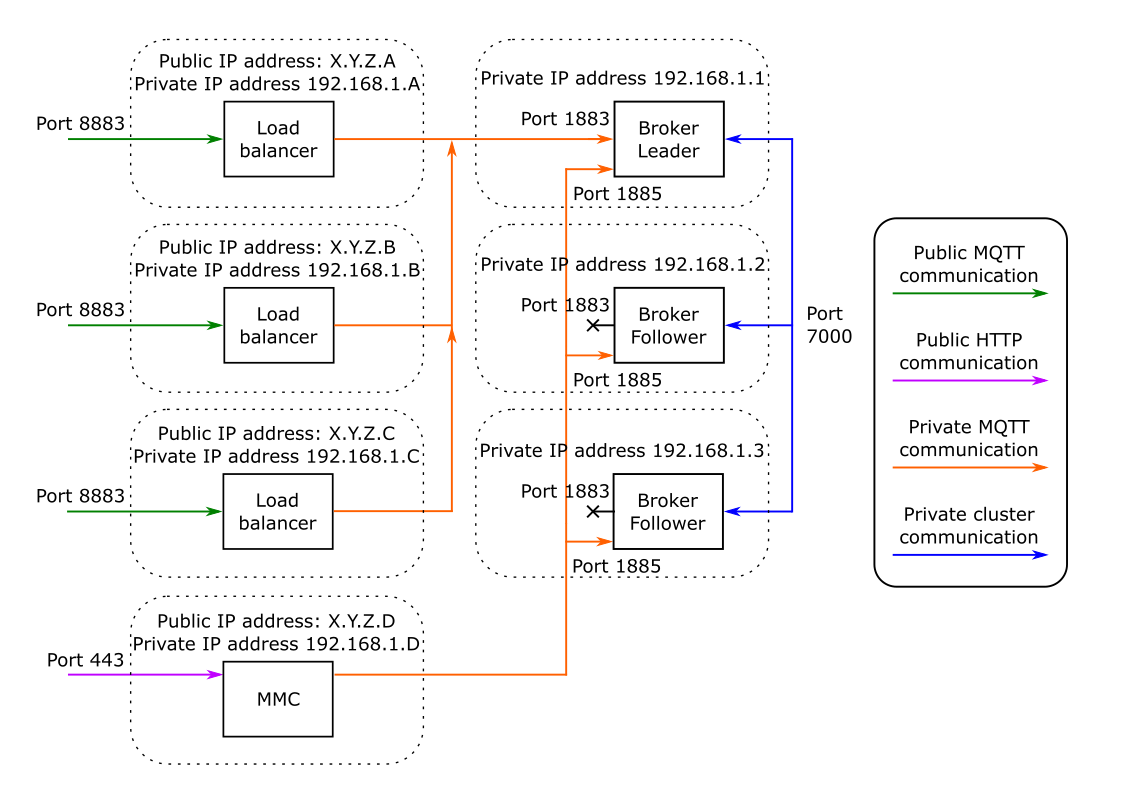

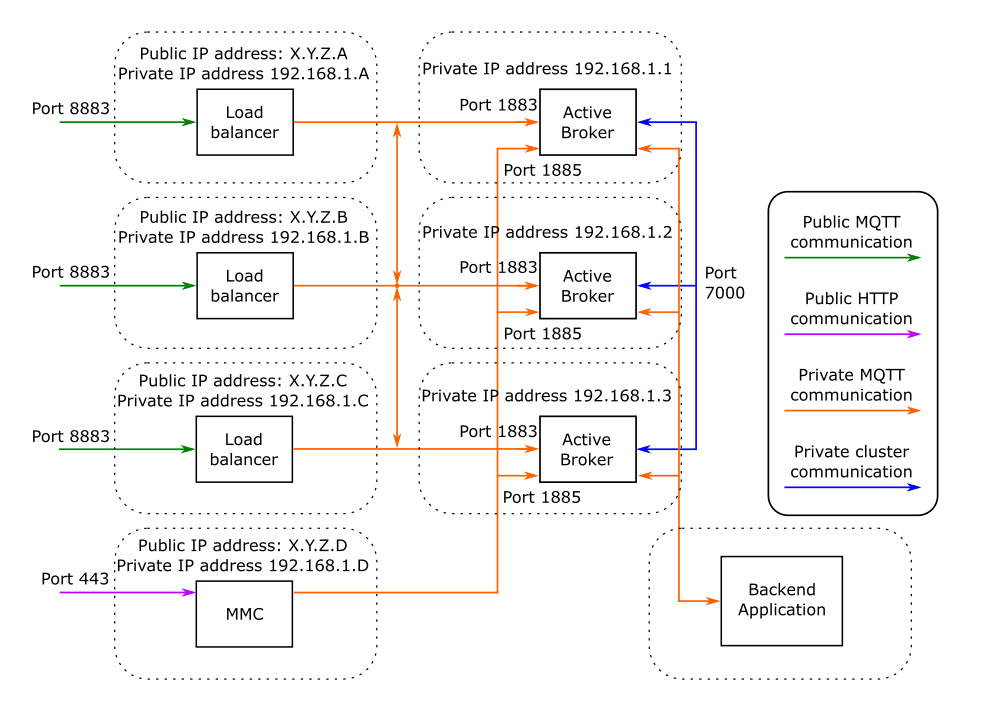

Figures 1 and 2 show a suggested cluster architecture for each of the two available modes. In each, there are three broker nodes running Mosquitto, and a fourth node providing a Management Center for Mosquitto (MMC) instance.

Figure 1 Full Sync Mode

Figure 2 Dynsec Sync Mode

The nodes operate with public and private network communication. The private communication includes node-to-node synchronization data, which is not encrypted and must be kept private. In this example, all private communication happens on a separate private network in the 192.168.1.* address space. If private IP addresses are not available or appropriate, then the private connection can be made using a VPN. Do not use a publicly accessible network for the cluster communication, or your credentials and data will be exposed to the internet even if your main MQTT communication is encrypted.

The load balancers listen on port 8883 in this example. They carry out TLS termination and forward connections. In full sync mode, all connections are forwarded to the leader broker node on port 1883, whereas dynsec sync mode allows forwarding to every broker.

Each broker node has port 1885 open to allow connections even when the node is not the leader. This port should only be used for cluster configuration and inspection. Any other use is not covered by HA. This is especially important for the full sync mode.

It is strongly recommended that all public communication is encrypted. If unencrypted connections are required, expose port 1883 on the load balancer and forward it to broker port 1883.

In this example there are three separate load balancers, and three separate broker nodes, with the broker nodes having no public networking. The purpose of the load balancer is threefold: to provide TLS/SSL termination, to route client connections to the currently available leader, and to provide separation between the public and private networks.

Other arrangements are possible. For example, combining the load balancer and broker on the same nodes may be desirable in simple clusters where the node count must be kept low.

Docker image installation

The Docker image is at registry.cedalo.com/mosquitto/mosquitto:2.7. Cedalo Registry Credentials are needed to access this image.

The following example docker-compose file shows the configuration described in the diagram.

version: '3.7'
services:
  mosquitto:
    image: registry.cedalo.com/mosquitto/mosquitto:2.7
    ports:
      - <private-network-ip>:1883:1883
      - <private-network-ip>:1885:1885
      - <private-network-ip>:7000:7000
    volumes:
      - ./mosquitto/config:/mosquitto/config
      - ./mosquitto/data:/mosquitto/data
    networks:
      - mosquitto
    environment:
      CEDALO_LICENSE_FILE: /mosquitto/data/license.lic
      CEDALO_HA_DATA_DIR: /mosquitto/data/ha
      MOSQUITTO_DYNSEC_PASSWORD: <admin password to use when generating config>
    restart: unless-stopped
networks:
  mosquitto:
    name: mosquitto
    driver: bridge

The suggested directory layout for the volume to be mounted on the Mosquitto Docker container is:

/mosquitto/docker-compose.yml

/mosquitto/mosquitto/config/mosquitto.conf

/mosquitto/mosquitto/data/license.lic

/mosquitto/mosquitto/data/ha/

Broker Configuration

The suggested broker configuration file for each node is given below:

global_plugin /usr/lib/cedalo_mosquitto_ha.so

enable_control_api true

allow_anonymous false

# The listener for receiving incoming MQTT connections from the load balancer.

# This listener will be automatically closed when this node is not the cluster

# leader.

listener 1883

# Optional websockets listener, if used this must be exposed in the

# docker-compose configuration

#listener 8080

#protocol websockets

# The listener for receiving the MMC connection / API control of a follower node.

# This listener will remain open regardless of the role of the node in the

# cluster. It should not be used by general purpose MQTT clients and is not

# HA supported.

listener 1885

admin_listener true

# Some sensible options - tweak as per your requirements

# Set max_keepalive to be reasonably higher than your expected max value

max_keepalive 1800

# max_packet_size can protect devices against unreasonably large payloads

max_packet_size 100000000

# Reduce network latency

set_tcp_nodelay true

In addition, environment variables must be set to configure the license file (CEDALO_LICENSE_FILE) and the HA data path (CEDALO_HA_DATA_DIR), as demonstrated in the docker-compose example above.

The MOSQUITTO_DYNSEC_PASSWORD variable is not required, but allows the initial authentication/authorisation configuration to be generated with an admin user using this password, which is convenient when generating configurations for multiple nodes. If this is not set, then each node will generate its own admin password on startup and save a plain text copy at /mosquitto/data/dynamic-security.json.pw.

The cluster configuration is controlled through the HA MQTT topic API described below.

Load balancer configuration

The load balancer should be configured to listen on the public address for the node, to terminate TLS/SSL connections, and to forward the client connections to the private addresses of the cluster nodes. In this example the broker port is 1883.

If the load balancer has an idle timeout value that disconnects clients if no network traffic is observed for a given period, this should be configured to be greater than the MQTT keepalive value you intend to use with your clients, otherwise there will be frequent disconnections for your idle clients. Many MQTT clients use a default of 60 seconds keepalive.
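The rule above can be checked with a few lines of Python. This is only a sketch: the function name and the 10 second safety margin are assumptions made for illustration (the margin mirrors the 70 second timeouts paired with a 60 second keepalive in the dynsec sync HAProxy example in this document), and neither is part of Mosquitto or HAProxy.

```python
def idle_timeout_ok(lb_timeout_s: float, keepalive_s: float, margin_s: float = 10.0) -> bool:
    """Return True if the load balancer idle timeout leaves headroom over the MQTT keepalive.

    margin_s is an illustrative safety margin, not a value mandated by any product.
    """
    return lb_timeout_s >= keepalive_s + margin_s


# A 70 s idle timeout is enough for clients using a 60 s keepalive.
print(idle_timeout_ok(70, 60))
# A 2 minute idle timeout is too short for clients using a 1800 s keepalive.
print(idle_timeout_ok(120, 1800))
```

If your clients use keepalive values close to the broker's max_keepalive setting, the load balancer timeouts need to be raised accordingly.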

An example for HAProxy is given below.

Full Sync HAProxy Configuration

global

user haproxy

group haproxy

daemon

# nbproc is not supported by HAProxy 2.5 and later; remove this line there.

nbproc 4

nbthread 1

maxconn 4096

# Default SSL material locations

ca-base /etc/ssl/certs

crt-base /etc/ssl/private

# Default ciphers to use on SSL-enabled listening sockets.

# For more information, see ciphers(1SSL). This list is from:

# https://hynek.me/articles/hardening-your-web-servers-ssl-ciphers/

# An alternative list with additional directives can be obtained from

# https://mozilla.github.io/server-side-tls/ssl-config-generator/?server=haproxy

ssl-default-bind-ciphers ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:RSA+AESGCM:RSA+AES:!aNULL:!MD5:!DSS

ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

ssl-dh-param-file /etc/haproxy/dhparams.pem

defaults

log global

mode tcp

option dontlognull

timeout connect 5000

timeout client 2m

timeout server 2m

frontend mosquitto_frontend

bind *:8883 ssl crt /etc/haproxy/server.comb.pem

mode tcp

option tcplog

default_backend mosquitto_backend

backend mosquitto_backend

mode tcp

option tcplog

option redispatch

server node1 192.168.1.1:1883 check

server node2 192.168.1.2:1883 check

server node3 192.168.1.3:1883 check

Dynsec Sync HAProxy Configuration

# This is node1. The config needs adapting when used on node2 and node3.

# node1.example.com has private IP address 192.168.1.1

# node2.example.com has private IP address 192.168.1.2

# node3.example.com has private IP address 192.168.1.3

global

log /dev/log local0

user haproxy

group haproxy

daemon

stats socket :9999 level admin expose-fd listeners

localpeer node1.example.com

nbthread 2

maxconn 30000

# Default SSL material locations

ca-base /etc/ssl/certs

crt-base /etc/ssl/private

# Default ciphers to use on SSL-enabled listening sockets.

# For more information, see ciphers(1SSL). This list is from:

# https://hynek.me/articles/hardening-your-web-servers-ssl-ciphers/

# An alternative list with additional directives can be obtained from

# https://mozilla.github.io/server-side-tls/ssl-config-generator/?server=haproxy

ssl-default-bind-ciphers ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:RSA+AESGCM:RSA+AES:!aNULL:!MD5:!DSS

ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

defaults

mode tcp

option dontlognull

timeout connect 5000

# The client and server timeout control when idle connections are

# closed. We have long running connections which may be idle. The

# longest idle time for an MQTT connection is set by the keepalive

# interval. If the haproxy timeouts are less than the MQTT keepalive

# values in use, then haproxy will disconnect clients before they get

# the chance to send a PINGREQ, if no other traffic is being sent.

# A typical keepalive is 60 seconds.

timeout client 70000

timeout server 70000

peers mypeers

peer node1.example.com *:10001

peer node2.example.com 192.168.1.2:10001

peer node3.example.com 192.168.1.3:10001

frontend mqtt_frontend_tls

bind *:8883 ssl crt /usr/local/etc/haproxy/certs/server-crt-key.pem

default_backend mqtt_backend

tcp-request inspect-delay 10s

tcp-request content reject unless { req.payload(0,0),mqtt_is_valid }

backend mqtt_backend

stick-table type string len 100 size 50000 expire 24h peers mypeers

stick on req.payload(0,0),mqtt_field_value(connect,username)

option redispatch

server node1.example.com 192.168.1.1:1883 check on-marked-down shutdown-sessions

server node2.example.com 192.168.1.2:1883 check on-marked-down shutdown-sessions

server node3.example.com 192.168.1.3:1883 check on-marked-down shutdown-sessions

Control API for Cluster

The following section presents the MQTT Control API for HA. Each payload has to be published to the $CONTROL/cedalo/ha/v1 topic. To receive the response to a command, subscribe to the $CONTROL/cedalo/ha/v1/response topic.
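As a minimal sketch of how a client might use these topics, the Python below wraps a single command in the "commands" envelope used by every request in this section. The helper names build_request and send_request are hypothetical. send_request assumes the third-party paho-mqtt package and an admin username and password; the default port 1885 follows this document's advice to use that listener for cluster configuration.

```python
import json

CONTROL_TOPIC = "$CONTROL/cedalo/ha/v1"
RESPONSE_TOPIC = "$CONTROL/cedalo/ha/v1/response"


def build_request(command: str, **fields) -> str:
    """Wrap one HA command in the {"commands": [...]} envelope and return JSON text."""
    return json.dumps({"commands": [{"command": command, **fields}]})


def send_request(host: str, payload: str, username: str, password: str, port: int = 1885) -> None:
    """Publish a request to one node (hypothetical helper, requires paho-mqtt)."""
    # Imported lazily so that build_request works without paho-mqtt installed.
    import paho.mqtt.publish as publish

    publish.single(
        CONTROL_TOPIC,
        payload,
        qos=1,
        hostname=host,
        port=port,
        auth={"username": username, "password": password},
    )


print(build_request("getCluster"))
```

Note that publish.single only sends the request. To see the reply, a separate client has to be subscribed to the response topic before the request is published.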

Creating the Cluster

A cluster can be fully managed by the Management Center. If you choose not to use it, use the following API commands to create the cluster.

Configuring the first node

Send the createCluster command to the first node to initialize the cluster. The nodes array contains a list of at least three nodes to be used for the cluster. The address and port of each node are the private network address of that node and the port to be used for cluster communication. The nodeid is a unique integer for the node within the cluster, in the range 1-1023. The mynode boolean must be set to true on the details of the node where the command is being sent.

The command should be sent directly to the node from within the private network, rather than through the load balancer.

{

"commands": [

{

"command": "createCluster",

"clustername": "name",

"nodes": [

{ "address": "192.168.1.1", "port": 7000, "nodeid": 1, "mynode": true },

{ "address": "192.168.1.2", "port": 7000, "nodeid": 2 },

{ "address": "192.168.1.3", "port": 7000, "nodeid": 3 }

]

}

]

}

Creating the cluster automatically elects a leader. All nodes are then synchronized and use the authentication configuration of the leader.

Joining the nodes to the cluster

Once the first cluster node is configured, the nodes in the list must be told to join the cluster. Send the joinCluster command to each new cluster node. The nodes list must include details of the cluster as in the createCluster command, with the new node details having mynode set to true. All addresses and ports are for the private network and cluster port.

{

"commands": [

{

"command": "joinCluster",

"clustername": "name",

"nodes": [

{ "address": "192.168.1.1", "port": 7000, "nodeid": 1 },

{ "address": "192.168.1.2", "port": 7000, "nodeid": 2, "mynode": true },

{ "address": "192.168.1.3", "port": 7000, "nodeid": 3 }

]

}

]

}
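Because the createCluster and joinCluster payloads differ only in the command name and in which node carries mynode, they can be generated from one node list. The sketch below is an illustration only: cluster_payload is a hypothetical helper, and its checks simply restate the constraints given in this section (at least three nodes, unique nodeid values in the range 1-1023).

```python
import json


def cluster_payload(command, clustername, nodes, my_nodeid):
    """Build a createCluster or joinCluster request for the node with id my_nodeid."""
    if command not in ("createCluster", "joinCluster"):
        raise ValueError("command must be createCluster or joinCluster")
    if len(nodes) < 3:
        raise ValueError("a cluster needs at least three nodes")
    ids = [n["nodeid"] for n in nodes]
    if len(set(ids)) != len(ids) or not all(1 <= i <= 1023 for i in ids):
        raise ValueError("nodeid values must be unique integers in the range 1-1023")
    if my_nodeid not in ids:
        raise ValueError("my_nodeid must be one of the listed nodes")
    entries = []
    for n in nodes:
        entry = dict(n)
        if n["nodeid"] == my_nodeid:
            entry["mynode"] = True  # only the node receiving the command is marked
        entries.append(entry)
    request = {"commands": [{"command": command, "clustername": clustername, "nodes": entries}]}
    return json.dumps(request, indent=2)


NODES = [
    {"address": "192.168.1.1", "port": 7000, "nodeid": 1},
    {"address": "192.168.1.2", "port": 7000, "nodeid": 2},
    {"address": "192.168.1.3", "port": 7000, "nodeid": 3},
]

create = cluster_payload("createCluster", "name", NODES, my_nodeid=1)  # send to node 1
joins = {nid: cluster_payload("joinCluster", "name", NODES, my_nodeid=nid) for nid in (2, 3)}
print(joins[2])  # send to node 2
```

Each generated payload is meant to be sent directly to the node it marks with mynode, from within the private network.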

Adding further nodes

Adding further nodes at a later date requires two steps:

- Informing the existing cluster of the new node

- Telling the new node to join the cluster.

Informing the cluster

Send the addNode command to the existing cluster. It must be sent to the leader node. Port 1883 is only available on the leader node; port 1885 is available on every node, but only the leader will accept cluster commands.

{

"commands": [

{

"command": "addNode",

"clustername": "name",

"address": "192.168.1.4",

"port": 7000,

"nodeid": 4

}

]

}

Joining the node to the cluster

Send the joinCluster command to the new cluster node. The nodes list must include details of the existing cluster nodes, with mynode omitted or set to false, and the new node details with mynode set to true. All addresses and ports are for the private network and cluster port.

It is strongly recommended to add nodes one at a time with the addNode and joinCluster commands, and to verify that the cluster is operating correctly before adding further nodes. If multiple nodes are added using addNode without those nodes joining the cluster, the cluster may be unable to maintain consensus, which would adversely affect the HA capability.
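The two steps for one new node can be sketched as a pair of payloads, one for the leader and one for the new node. The helper add_node_requests is hypothetical and only assembles JSON in the shapes shown in this section; it does not send anything or verify cluster health.

```python
import json


def add_node_requests(clustername, existing_nodes, new_node):
    """Return (addNode request for the leader, joinCluster request for the new node)."""
    add_req = {"commands": [{"command": "addNode", "clustername": clustername, **new_node}]}
    # Existing nodes are listed without mynode; only the joining node sets it.
    nodes = [{k: v for k, v in n.items() if k != "mynode"} for n in existing_nodes]
    nodes.append({**new_node, "mynode": True})
    join_req = {"commands": [{"command": "joinCluster", "clustername": clustername, "nodes": nodes}]}
    return json.dumps(add_req), json.dumps(join_req)


existing = [{"address": f"192.168.1.{i}", "port": 7000, "nodeid": i} for i in (1, 2, 3)]
new_node = {"address": "192.168.1.4", "port": 7000, "nodeid": 4}

to_leader, to_new_node = add_node_requests("name", existing, new_node)
print(to_leader)    # step 1: publish to the leader
print(to_new_node)  # step 2: publish to the new node
```

Producing the requests one node at a time keeps the workflow in line with the recommendation to confirm the cluster is healthy before adding the next node.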

Removing nodes from Cluster

Inform Cluster Leader

To remove a node from the cluster, send the removeNode command to the cluster leader.

{

"commands": [

{

"command": "removeNode",

"clustername": "name",

"address": "192.168.1.2",

"port": 7000,

"nodeid": 2

}

]

}

All details must match for the node to be removed.

The cluster will refuse to remove a node if it will result in the cluster having fewer than three nodes.

Leave Cluster

Once the cluster leader has been informed, send the leaveCluster command to remove the node from the cluster. The command should be sent to the node that is to leave the cluster, not to the cluster leader. The clustername, address, port, and nodeid must match.

{

"commands": [

{

"command": "leaveCluster",

"clustername": "name",

"address": "192.168.1.2",

"port": 7000,

"nodeid": 2

}

]

}
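Since removeNode and leaveCluster must carry identical node details, generating both from one record avoids mismatches. The following is a sketch with a hypothetical helper, removal_requests. The cluster_size argument and the client-side size check are assumptions added for illustration; the cluster itself enforces the three-node minimum.

```python
import json


def removal_requests(clustername, node, cluster_size):
    """Return (removeNode request for the leader, leaveCluster request for the leaving node)."""
    if cluster_size - 1 < 3:
        raise ValueError("removal would leave the cluster with fewer than three nodes")
    fields = {
        "clustername": clustername,
        "address": node["address"],
        "port": node["port"],
        "nodeid": node["nodeid"],
    }
    remove = json.dumps({"commands": [{"command": "removeNode", **fields}]})
    leave = json.dumps({"commands": [{"command": "leaveCluster", **fields}]})
    return remove, leave


node2 = {"address": "192.168.1.2", "port": 7000, "nodeid": 2}
to_leader, to_node = removal_requests("name", node2, cluster_size=4)
print(to_leader)  # publish to the cluster leader first
print(to_node)    # then publish to the node that is leaving
```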

Deleting the cluster

Send the deleteCluster command to the cluster leader. All nodes will retain their current settings, but will no longer be part of a cluster.

{

"commands": [

{

"command": "deleteCluster",

"clustername": "name"

}

]

}

Getting cluster information

Send the getCluster command to the cluster leader.

{

"commands": [

{

"command": "getCluster"

}

]

}

Getting node information

Send the getNode command to any individual node. Nodes are always available for communication on port 1885.

{

"commands": [

{

"command": "getNode"

}

]

}

Set the cluster leader

Send the setLeader command to promote the specified node to be the cluster leader.

{

"commands": [

{

"command": "setLeader",

"nodeid": 4

}

]

}

Cluster Monitoring Documentation

Effective cluster monitoring is pivotal for maintaining the health, performance, and reliability of your system. This guide introduces three methodologies for monitoring your cluster's state, utilizing system topics, the Metrics Plugin, and the Audit Trail Plugin. Each method provides unique insights and operational benefits.

Overview of Monitoring Methods

1. System Topics

System topics offer real-time insights into the cluster's state, facilitating immediate recognition and resolution of potential issues.

- Total Nodes: "$SYS/broker/cedalo/ha/voting_nodes/total" reflects the total count of nodes within the cluster, essential for understanding its overall capacity.

- Online Nodes: "$SYS/broker/cedalo/ha/voting_nodes/online" indicates the number of currently active nodes. Discrepancies between this figure and the total node count can highlight issues within the cluster. -1 indicates that the broker is a follower.

Monitoring these topics is critical for maintaining cluster integrity and performance.
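A monitoring client might interpret the two values as in the sketch below. The function names are hypothetical, and the code assumes that payloads arrive as decimal text, as is usual for Mosquitto $SYS topics. Subscribing to the topics is left to whichever MQTT client library you use.

```python
TOTAL_TOPIC = "$SYS/broker/cedalo/ha/voting_nodes/total"
ONLINE_TOPIC = "$SYS/broker/cedalo/ha/voting_nodes/online"


def parse_count(payload: bytes) -> int:
    """Convert a $SYS payload to an integer (assumes decimal text such as b"3")."""
    return int(payload.decode("utf-8").strip())


def describe(total: int, online: int) -> str:
    """Summarise the voting node counts reported by one broker."""
    if online == -1:
        return "follower: this broker does not report the online count"
    if online < total:
        return f"warning: {total - online} of {total} voting nodes are not online"
    return f"ok: all {total} voting nodes are online"


print(describe(parse_count(b"3"), parse_count(b"3")))
print(describe(parse_count(b"3"), parse_count(b"2")))
print(describe(parse_count(b"3"), parse_count(b"-1")))
```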

2. Metrics Plugin

The Metrics Plugin for Pro Mosquitto parallels the system topics' functionality, offering an integrated approach to monitoring through:

- Cluster Capacity: mosquitto_ha_voting_nodes provides a count of the total nodes, akin to the information from the $SYS/broker/cedalo/ha/voting_nodes/total topic.

- Active Nodes: mosquitto_ha_voting_nodes_online tracks the number of online nodes, similar to the $SYS/broker/cedalo/ha/voting_nodes/online topic.

This plugin enhances operational oversight by facilitating the integration of cluster state metrics into broader monitoring systems.

3. Audit Trail Plugin

Expanding upon the capabilities of the Metrics Plugin, the Audit Trail Plugin for Pro Mosquitto offers advanced monitoring through event-driven insights and comprehensive logging:

- Event-Driven Monitoring: Configure alerts and automations based on specific cluster state changes to improve response times and operational efficiency.

- Audit Trails: Generate detailed logs for state changes within the cluster, aiding in troubleshooting and historical analysis.

Make sure the "ha" module is included in the filter section of your audit-trail.json configuration file, so that all relevant cluster health and state change events are captured.

Possible Cluster States:

- stable: The cluster has sufficient voting nodes in contact with the leader to be able to suffer the loss of at least one node without issue.

- degraded: The cluster has the minimum number of voting nodes in contact with the leader, and hence is at risk of going offline if a single node disconnects.

- offline: The cluster does not have a majority and is offline.
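The three states can be related to node counts with a short sketch. It assumes that the "minimum number of voting nodes" means a bare majority (more than half of the voting nodes, counting the leader); the source does not spell this out, so treat the thresholds as an interpretation rather than the broker's exact logic.

```python
def cluster_state(total_voting_nodes: int, online_voting_nodes: int) -> str:
    """Classify the cluster, assuming a simple majority rule (an assumption, see lead-in)."""
    majority = total_voting_nodes // 2 + 1
    if online_voting_nodes < majority:
        return "offline"
    if online_voting_nodes == majority:
        return "degraded"  # losing one more node would lose the majority
    return "stable"


for online in (3, 2, 1):
    print(online, cluster_state(3, online))
```

For a three-node cluster this gives stable with three nodes online, degraded with two, and offline with one, which matches the behaviour described in the architecture overview.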

Example Log:

{

"timestamp": "2024-03-13T13:33:46.036Z",

"hostname": "mosquitto",

"source": "ha",

"operation": "clusterStatus",

"params": { "status": "degraded" },

"operationType": "update"

}
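An alerting script could filter such entries as sketched below. The function name needs_alert is hypothetical, and the code assumes each audit trail entry is available as one JSON document with the field names shown in the example log.

```python
import json


def needs_alert(entry_text: str) -> bool:
    """Return True for HA clusterStatus events that report a non-stable cluster."""
    event = json.loads(entry_text)
    if event.get("source") != "ha" or event.get("operation") != "clusterStatus":
        return False
    return event.get("params", {}).get("status") in ("degraded", "offline")


example = """
{
  "timestamp": "2024-03-13T13:33:46.036Z",
  "hostname": "mosquitto",
  "source": "ha",
  "operation": "clusterStatus",
  "params": { "status": "degraded" },
  "operationType": "update"
}
"""
print(needs_alert(example))
```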

Leveraging these three methods provides a comprehensive monitoring solution, enhancing your ability to maintain a stable and reliable cluster environment.

Cluster System Requirements

On each High-Availability node the components need the following system resources:

Server hardware

For the load balancer we recommend HAProxy. The recommended hardware for each instance is a recent CPU (AMD or Intel) with 4+ cores and 8 GB of RAM if the load balancers run separately, or 8+ cores if the load balancers run on the same hosts as the Mosquitto nodes. For storage, 5 GB (R/W speed of at least 500 MB/s) in a RAID is recommended.

| | Mosquitto Node | Load balancer | Mosquitto Node + Load balancer |
|---|---|---|---|
| CPU | 4+ Cores | 4+ Cores | 8+ Cores |
| RAM | 8 GB | 8 GB | 8 GB |
| Storage | 5 GB (500 MB/s+) | 5 GB (500 MB/s+) | 5 GB (500 MB/s+) |

If stream processing is used, the following recommendations apply to each node. The CPU recommendations stay the same, but 24 GB of RAM and 50 GB of storage (R/W speed of at least 500 MB/s) are recommended.

Stream Plugin usage:

| | Mosquitto Node | Load balancer | Mosquitto Node + Load balancer |
|---|---|---|---|
| CPU | 4+ Cores | 4+ Cores | 8+ Cores |
| RAM | 24 GB | 24 GB | 24 GB |
| Storage | 50 GB (500 MB/s+) | 50 GB (500 MB/s+) | 50 GB (500 MB/s+) |

Network

As shown in the cluster architecture, the following network system is required.

All nodes need access to two networks: a public network with public IPs (where the MQTT clients and the browsers used to view MMC information reside) and a private network with private IPs (for communication between nodes, load balancers, and between nodes and the Management Center). If a dedicated private network is not possible, a VPN can be set up to connect the components privately. Private network communication uses TCP, while the load balancers are used for SSL termination.

All nodes are 100% replicas of each other and are synchronized in real time via the private network.

Network speed: Depending on expected bandwidth, 100 Mbit or 1 Gbit network is recommended.

The network speed always needs to match the message bandwidth expected from your MQTT clients. The upper limit on the bandwidth that the Mosquitto cluster can cope with is governed by many factors; however, 30 MB/s (240 Mbit/s) is the ultimate upper limit of what a Mosquitto node can handle under certain circumstances. Therefore, it is recommended that the network between the nodes is at least a 1 Gbit network.
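The unit conversion behind these figures is a multiplication by eight (bits per byte). The sketch below uses hypothetical helper names and ignores protocol overhead, so the results are rough lower bounds on the link capacity needed.

```python
def mbyte_to_mbit(mbyte_per_s: float) -> float:
    """Convert a throughput in MB/s to Mbit/s (8 bits per byte)."""
    return mbyte_per_s * 8


def link_utilisation(mbyte_per_s: float, link_mbit_per_s: float) -> float:
    """Fraction of a link consumed by the given throughput, ignoring protocol overhead."""
    return mbyte_to_mbit(mbyte_per_s) / link_mbit_per_s


print(mbyte_to_mbit(30))           # 240
print(link_utilisation(30, 1000))  # 0.24
print(link_utilisation(30, 100))   # 2.4
```

A node running at the 30 MB/s ceiling would use about a quarter of a 1 Gbit link but more than twice the capacity of a 100 Mbit link, which is why a Gbit network is recommended between the nodes.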